This is what emerged from a GARTNER study: after five years of hearing about nothing but the CLOUD, a new technological trend has marked a turning point.

The COVID emergency may have slowed this process down, but the direction is still clear, and it is driven by some "limitations" that have emerged when implementing industrial data management projects in the cloud.

Any examples? Here are two.

- Even with the performance that 5G brings, connectivity remains a bottleneck (or a "single point of failure", as Americans like to say): for some processes, supervision, whether of a machine, a line or a whole plant, is too important to risk losing, and for now the only solution is still to keep it on the plant.

- The amount of data generated by a production process can hardly be stored and managed in the CLOUD in its entirety: the problem is no longer performance but cost. Both storing and then analyzing industrial BIG DATA would require storage and computing power out of proportion to the means of SMEs, and even of multinational companies.

So what is the solution? YES CLOUD? NO CLOUD?

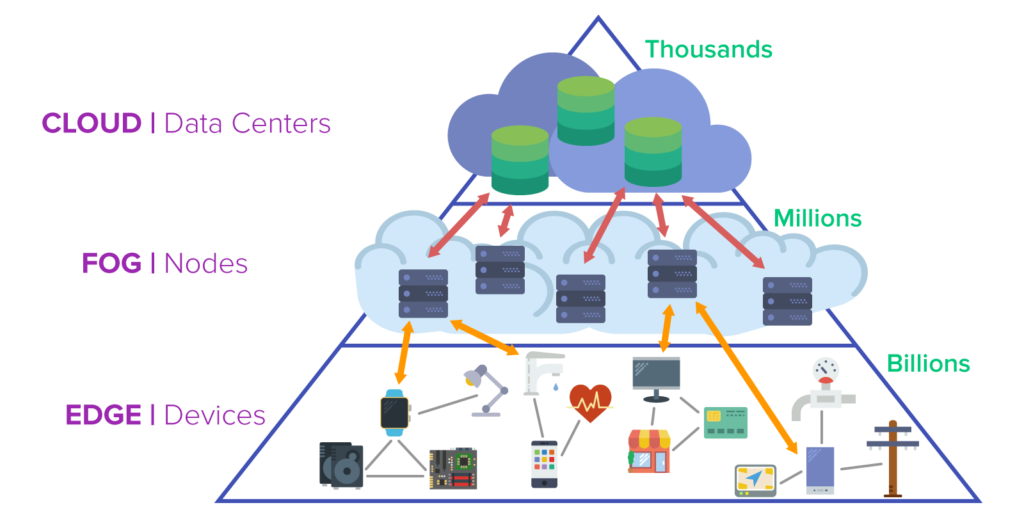

Obviously the right answer lies somewhere in the middle: the CLOUD should certainly be used, but it should be fed not with raw data (RAW DATA, which we know industrial plants produce in large quantities) but with information that has already been processed and is ready for second-level analyses, benchmarking, and so on.
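
As a rough illustration of "processed information instead of raw data", here is a minimal sketch that assumes the edge device receives raw samples as `(timestamp, tag, value)` tuples and forwards only per-window statistics to the cloud. The function name `summarize`, the tag names `TT101`/`PT202` and the 60-second window are hypothetical choices for this example, not the behaviour of any specific product.

```python
from collections import defaultdict
from statistics import mean


def summarize(samples, window_s=60):
    """Collapse raw (timestamp_s, tag, value) samples into one summary
    record per tag and per fixed time window (hypothetical edge-side step)."""
    buckets = defaultdict(list)
    for ts, tag, value in samples:
        # Align each sample to the start of its window (0, 60, 120 s, ...)
        window_start = (ts // window_s) * window_s
        buckets[(tag, window_start)].append(value)

    summaries = []
    for (tag, window_start), values in sorted(buckets.items()):
        # Only these aggregates would be sent to the cloud, not every sample
        summaries.append({
            "tag": tag,
            "window_start": window_start,
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        })
    return summaries


# Hypothetical example: two tags sampled once per second for two minutes.
raw = [(t, tag, float(t % 10)) for t in range(120) for tag in ("TT101", "PT202")]
summary = summarize(raw)
print(len(raw), "raw samples ->", len(summary), "summary records")
```

Here 240 raw samples become 4 records; at real sampling rates and over months of operation, this kind of reduction is what addresses the cost problem described above. Which aggregates to keep (min/max/mean, counts, alarms, KPIs) depends on the second-level analyses you plan to run in the cloud.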

This is exactly what the GARTNER study refers to: an intermediate layer is needed between the production plants and the enterprise network. We are no longer talking about simple boxes that merely act as a bridge, but about devices that can provide guarantees on the following points.

- High performance (storage and virtual machine management for ICS systems): enough computing and storage capacity to "host" both the SUPERVISION SYSTEM and the PROCESS DATABASE on the device. This makes it possible to send already "processed", useful data to the CLOUD for advanced analysis.

- High Availability (a level of redundancy that guarantees UPTIME greater than 99.99%): architectures capable of guaranteeing HIGH AVAILABILITY (and in some cases FAULT TOLERANCE) and of keeping supervision, and its visualization via clients, always available.

- A secure connection to the CLOUD: these devices are designed to let only authorized data and commands out (and in). In other words, an active filter on both incoming and outgoing traffic, protecting both the plant systems and the architectures in the CLOUD.

- A reliable, easily manageable point of IT/OT CONVERGENCE: IT is already familiar with the EDGE concept, but often knows little about what lies underneath (PLCs, SCADA and ICS systems in general).
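
To put the 99.99% UPTIME figure in perspective, here is the maximum downtime it allows over a year, assuming a 365-day year and availability measured around the clock:

$$
\text{max downtime} = (1 - 0.9999) \times 365 \times 24 \times 60\ \text{min} = 52.56\ \text{min/year}
$$

By comparison, a 99.9% target would allow 525.6 minutes, roughly 8.8 hours of downtime per year.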

With these devices it is possible to create a single connection point and provide the "upper floors" with INFORMATION instead of PURE (RAW) DATA. They are also easy to replace: it is enough to always keep a backup of the applications in use (and with some products, such as the Stratus ztC, not even that is needed, since they realign completely automatically).

Want to explore the topic further and find out what tomorrow's architectures will look like?

Download this completely free White Paper!
