IoT is simple: it is simple to understand, simple to install and simple to use.

I buy a fitness bracelet, download the app, and by the end of the day I have my electrocardiogram, the kilometers I have traveled and the minutes I have spent sitting.

The IIoT is a little more complex: we are no longer in the consumer sphere, so how data is produced, historized, analyzed and returned is no longer a personal choice. It is closely tied to the dynamics, policies and best practices of a company, an institution or a specific industrial sector.

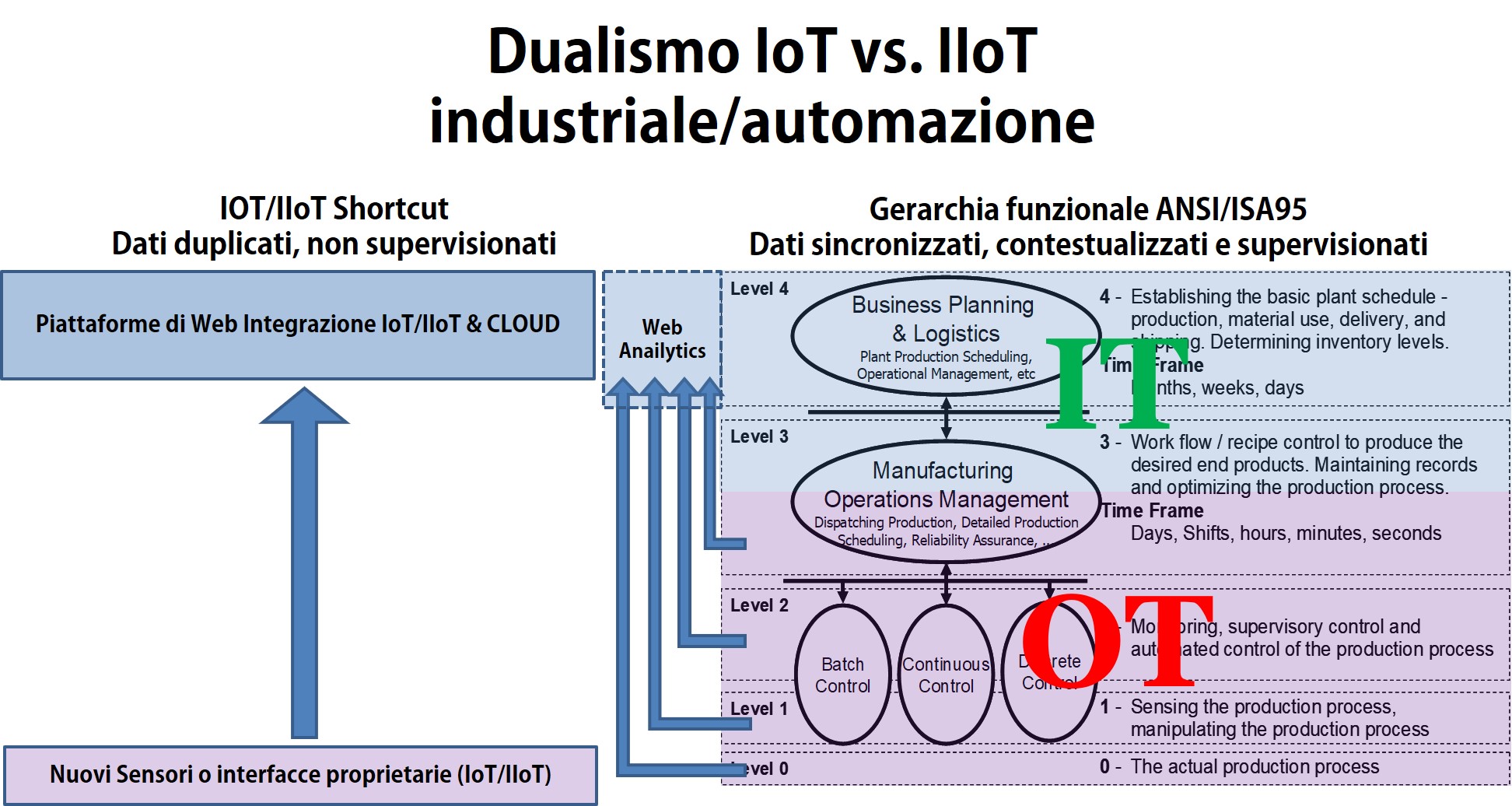

In practice, while in the B2C world the connection between the physical world and the cloud is direct (as shown on the left side of the figure), in industrial environments and utility applications it is difficult to overturn an existing architecture or the hierarchies established as the process was created and evolved.

There are therefore two ways to implement an application or a process with the I(I)oT, and nothing prevents them from being used at the same time. What matters most today for the continuous improvement of quality and efficiency is VISIBILITY into the process and, above all, the use of raw data.

It is well known that most production data (and not only production data) is never reused for analysis. This paradox is changing fast, thanks to the historization and analysis capabilities offered by the Cloud and, above all, to the elastic cost of running these operations there.

So while the Cloud offers great opportunities to anyone who wants to investigate causes and effects in their systems, it also raises a problem: how to make available data that comes from areas which, in the past, were often deliberately isolated (not connected).

IN SIMPLE WORDS: HOW DO I EXTRACT AS MUCH DATA AS POSSIBLE FROM A SYSTEM WITHOUT TOUCHING IT?

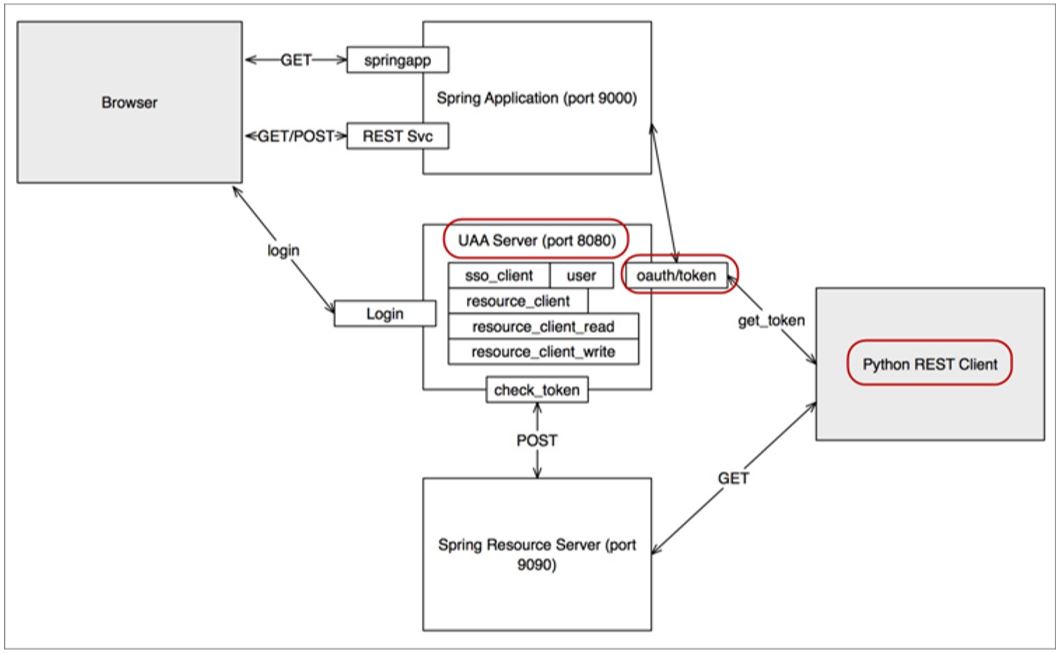

The answer to this question is the REST API.

REST APIs (properly configured, of course) are reliable and secure because they do not affect the production process in any way, and they make it possible to build the perfect BRIDGE to the Cloud: we work in parallel, so no new vulnerabilities are introduced (beyond those that already existed...).
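To make the "bridge" idea concrete, here is a minimal Python sketch. It assumes a hypothetical `read_points` function that queries the historian (for example through its REST API) and a hypothetical `send_to_cloud` function that uploads to a cloud store; neither is a real library call. The bridge only reads from the process side and keeps a timestamp watermark so that points already forwarded are not sent again.

```python
from typing import Callable, Dict, List

# A data point as a plain dict, e.g. {"tag": "T1", "timestamp": 20, "value": 2.0}.
# This shape is an assumption for illustration only.
Point = Dict[str, object]


def bridge_once(
    read_points: Callable[[int], List[Point]],      # hypothetical historian reader
    send_to_cloud: Callable[[List[Point]], None],   # hypothetical cloud uploader
    last_timestamp: int,
) -> int:
    """Forward points newer than last_timestamp from the historian to the cloud.

    The bridge only reads from the historian; nothing is written back to the
    process side. Returns the new watermark (latest forwarded timestamp).
    """
    points = read_points(last_timestamp)
    # Keep only points strictly newer than the watermark.
    fresh = [p for p in points if p["timestamp"] > last_timestamp]
    if not fresh:
        return last_timestamp
    send_to_cloud(fresh)
    return max(p["timestamp"] for p in fresh)
```

Calling `bridge_once` periodically with the returned watermark gives a simple polling loop that runs in parallel with production, without changing the process itself.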

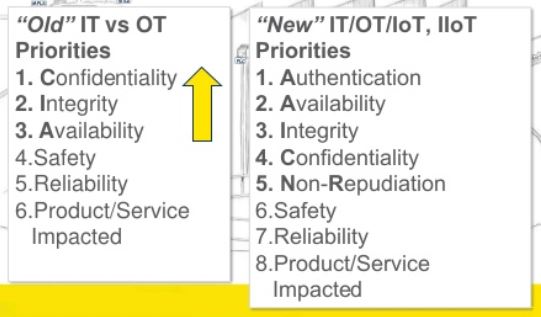

Today it is important to evaluate solutions in which data KEEPS THE PROPERTIES THAT MAKE IT "SECURE" (confidentiality, integrity and availability, plus authentication and non-repudiation). From this perspective, it is the PDBs (Process Databases, or process loggers) that must guarantee all of these properties while AT THE SAME TIME MAKING THE DATA AVAILABLE TO THOSE WHO CAN DRAW VALUE FROM IT.

GE Digital's Historian does just that: it historizes data precisely and reliably and makes it possible to expose that data through REST APIs with the following characteristics.

Public REST API

Historian 7.0 includes a fully supported Public REST API, including:

• Separate Historian REST API Reference Guide with documented examples and troubleshooting techniques.

• Full exposure to Historian tag functionality.

• The API is included in the installer.

• Retrieval based on all Historian query modes, including calculated modes.

• Support for the UAA token.

• Sample code in cURL.
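As a sketch of how a client might combine UAA authentication with a Historian query, the Python below only builds the two HTTP requests and does not send them. It assumes the standard OAuth2 client-credentials flow against a UAA `/oauth/token` endpoint. The Historian base URL, the `/datapoints/raw` path and the `tagNames`/`count` parameters are hypothetical placeholders; the real endpoints and query modes are documented in the Historian REST API Reference Guide.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request


def build_uaa_token_request(uaa_url: str, client_id: str, client_secret: str) -> Request:
    """Build an OAuth2 client-credentials token request for a UAA server."""
    # Client credentials are sent with HTTP Basic authentication.
    creds = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    body = urlencode({"grant_type": "client_credentials"}).encode()
    return Request(
        f"{uaa_url.rstrip('/')}/oauth/token",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {creds}",
            "Content-Type": "application/x-www-form-urlencoded",
            "Accept": "application/json",
        },
    )


def build_historian_query(api_base: str, token: str, path: str, params: dict) -> Request:
    """Build a read-only, bearer-authenticated GET request.

    `path` and `params` are hypothetical placeholders, not documented
    Historian endpoints or parameter names.
    """
    url = f"{api_base.rstrip('/')}/{path.lstrip('/')}?{urlencode(params)}"
    return Request(
        url,
        method="GET",
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
    )
```

In practice the token request would be sent with `urllib.request.urlopen`, the `access_token` field read from the JSON response, and that value passed as `token` to the query builder. Keeping the Historian call a plain GET matches the read-only role of the bridge.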